Fingerprint Individuality Assessment Featured in May Issue

Hugh A. Chipman, Technometrics Editor

The fingertip pattern of an individual is unique to that person. This is the central premise of fingerprint-based authentication systems. In practice, various sources of variability can obscure this uniqueness, leading to erroneous decisions. A central problem in fingerprint analysis is to determine the amount of information in a fingerprint and assess the extent of its uniqueness. This problem can be addressed by developing statistical models that adequately capture the different sources of variability.

In “Assessing Fingerprint Individuality Using EPIC: A Case Study in the Analysis of Spatially Dependent Marked Processes,” Chae Yong Lim and Sarat Dass develop statistical models for fingerprint features called “minutiae” that exhibit certain distributional characteristics, such as clustering tendencies and spatial dependence. Inference methodology is developed for these models and is used for quantifying the extent of uniqueness in a pair of fingerprints.

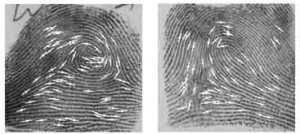

A salient characteristic of fingerprint images is the smooth, flow-like pattern of alternating dark and light lines, termed ridges and valleys, over the entire fingerprint domain. Occasionally, the ridges abruptly end or bifurcate, and these anomalous terminations and bifurcations are termed minutiae. Minutiae information consists of the location and orientation of the ridge anomaly. The picture above illustrates different ridge flow patterns and minutiae clustering characteristics for two fingerprints. White squares and lines denote minutiae locations and orientations, respectively. A high number of minutiae matches would provide support for a genuine match.

By viewing the minutiae information as a marked point process in two dimensions (with orientations as the marks), the paper develops novel point process models with spatially dependent mark distributions, using them to assess individuality. Are the two fingerprints shown a genuine match? Check out this featured article to find out.

The issue features several novel statistical methods inspired by interesting and challenging applications. For example, motivated by a design and analysis problem relating to the formulation of a new potato crisp, Lulu Kang, V. Roshan Joseph, and William A. Brenneman develop “Design and Modeling Strategies for Mixture-of-Mixtures Experiments.” In mixture-of-mixtures experiments, major components are themselves mixtures of some other components, called minor components. Sometimes, components are divided into different categories where each category is called a major component and the components within a major component become minor components. The special structure of the mixture-of-mixtures experiment makes design and modeling different from a typical mixture experiment. Constraints imposed by both the mixture-of-mixtures structure and other practical considerations add further complexity. The authors propose a new model called the major-minor model to overcome some of the limitations of the commonly used multiple-Scheffé model. A strategy is developed for designing experiments that are much smaller in size than those based on the existing methods.
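The nesting of minor components inside major components can be made concrete with a little arithmetic. The sketch below is only an illustration of the mixture-of-mixtures structure, not the authors' major-minor model: the function name `overall_proportions` and the recipe values are hypothetical. It assumes the major proportions sum to one, the minor proportions within each major component sum to one, and the overall share of a minor ingredient is the product of the two.

```python
def overall_proportions(major, minor, tol=1e-9):
    """Flatten a mixture-of-mixtures recipe into overall proportions.

    major: dict mapping major component -> its share of the whole blend
    minor: dict mapping major component -> dict of minor shares within it
    (Both structures are hypothetical, chosen for illustration.)
    """
    # The major components together must make up the whole blend.
    if abs(sum(major.values()) - 1.0) > tol:
        raise ValueError("major proportions must sum to 1")

    overall = {}
    for name, share in major.items():
        parts = minor[name]
        # Each major component is itself a complete mixture of its minors.
        if abs(sum(parts.values()) - 1.0) > tol:
            raise ValueError(f"minor proportions within {name!r} must sum to 1")
        for sub, frac in parts.items():
            # Overall share = major share x share within that major component.
            overall[(name, sub)] = share * frac
    return overall


# Made-up recipe with two major components, each a blend of two minors.
major = {"starch": 0.5, "oil": 0.5}
minor = {
    "starch": {"potato": 0.5, "corn": 0.5},
    "oil": {"sunflower": 0.25, "palm": 0.75},
}
blend = overall_proportions(major, minor)
```

Because both levels are constrained to sum to one, the flattened proportions also sum to one. A design must respect these nested constraints, which is one reason the problem differs from a typical mixture experiment.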

In “Seasonal Dynamic Factor Analysis and Bootstrap Inference: Application to Electricity Market Forecasting,” Andrés M. Alonso, Carolina García-Martos, Julio Rodríguez, and María Jesús Sánchez develop a novel model that combines factor analysis and time series to predict Spanish electricity prices. Electricity’s special features (nonstorability and instantaneous response to demand) are responsible for largely unpredictable price behavior. Accurate forecasts address a problem of national importance and enable the appropriate scheduling of generation units. The authors propose the seasonal dynamic factor analysis (SeaDFA), accomplishing dimension reduction in vectors of time series so both common and specific components are extracted, accounting for regular dynamics and seasonality.

In studies in which data are generated from multiple locations or sources, anomalous observations are not uncommon. Motivated by the application of establishing a reference value in an inter-laboratory setting with outlying labs, Garritt Page and David Dunson propose “Bayesian Local Contamination Models for Multivariate Outliers.” The local contamination model flexibly accommodates unusual multivariate realizations. The proposed method models the process level of a hierarchical model using a mixture with a parametric component and a possibly nonparametric contamination. Considerable flexibility is achieved by allowing varying random subsets of the elements in the lab-specific mean vectors to be allocated to the contamination component.

Weapons stockpiles are expected to have high reliability over time, but prudence demands regular testing to rule out the possibility of detrimental aging effects. That is, one must keep watch for unexpected degradations to maintain confidence that reliability is high. In “A Random Onset Model for Degradation of High-Reliability Systems,” Scott Vander Wiel, Alyson Wilson, Todd Graves, and Shane Reese present a model for a stockpile in which initially high reliability could begin to decline at any time. Each year presents a small chance that degradation begins and continues at a fixed but uncertain rate. Under these conditions, ongoing testing is imperative to maintain confidence that reliability remains high. The model provides a framework for answering questions about the effects of reduced sampling, providing managers with an assessment of how confidence will be affected if the surveillance rate is decreased to save money.
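The random onset idea can be illustrated with a simplified forward calculation. The sketch below is not the model from the paper. It assumes a known per-year onset probability `p`, an initial reliability `r0`, and a known linear decline `d` per year after onset, whereas the article describes the rate as uncertain. All names and values are hypothetical.

```python
def expected_reliability(t, p, r0, d):
    """Expected reliability after t years under a simplified random-onset sketch.

    Assumptions (illustrative only):
      - each year, degradation begins with probability p if it has not yet begun
      - if onset occurs in year s, reliability in year t >= s is r0 - d * (t - s),
        floored at zero
      - before onset, reliability stays at r0
    """
    # Case 1: no onset in any of the t years.
    total = (1.0 - p) ** t * r0
    # Case 2: onset first occurs in year s (geometric waiting time).
    for s in range(1, t + 1):
        prob_onset_at_s = (1.0 - p) ** (s - 1) * p
        total += prob_onset_at_s * max(0.0, r0 - d * (t - s))
    return total


# Hypothetical inputs: 2% yearly onset chance, 0.99 initial reliability,
# 0.01 loss per year once degradation has begun.
curve = [expected_reliability(t, p=0.02, r0=0.99, d=0.01) for t in range(0, 31)]
```

Even with a small yearly onset probability, the expected reliability drifts downward over time, which suggests why confidence in a stockpile cannot rest on early test results alone.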

In “Blocked Designs for Experiments with Non-Normal Response,” David Woods and Peter van de Ven develop efficient blocked designs for nonstandard response models. The paper presents the first general methods for exponential family responses described by a marginal model fitted via generalized estimating equations. This methodology is appropriate when the blocking factor is a nuisance variable, as often occurs in industrial experiments. A D-optimality criterion is developed for finding designs robust to the values of the marginal model parameters and applied using two strategies: unrestricted algorithmic search and blocking of an optimal design for the corresponding generalized linear model. Designs from each strategy are shown to be more efficient than designs that ignore blocking.

In recent years, a great deal of effort has been invested in developing sensors to detect, locate, and identify “energetic” electromagnetic events based on imaging spectrometer data. In “Modeling Spectral-Temporal Data from Point Source Events,” Monica Reising, Max Morris, Stephen Vardeman, and Shawn Higbee discuss model building for spectral-temporal data of this type. In military applications, it is imperative to quickly identify particular characteristic patterns of evolution over time. While physical sensor technology is developing rapidly, there is a lag in the development of algorithms that can be used to identify and discriminate between types of energetic events in real time. The models developed in this paper are a first step toward narrowing the data-algorithm gap.

The issue closes with an interesting combination of application and methods. Partha Sarathi Mukherjee and Peihua Qiu develop “3-D Image Denoising by Local Smoothing and Nonparametric Regression,” responding to increased availability of 3-D images from magnetic resonance imaging (MRI), functional MRI (fMRI), and other sources. Removal of noise from observed 3-D images can substantially improve subsequent image analyses. The complex structure of 3-D images makes direct extensions of 2-D denoising methods inefficient. For instance, edge locations are surfaces in 3-D cases, which are much more challenging to handle. A strength of the method is its ability to preserve edges and major edge structures such as intersections of two edge surfaces, pyramids, and pointed corners.