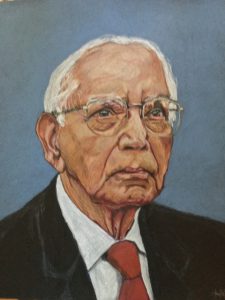

C. R. Rao’s Foundational Contributions to Statistics: In Celebration of His Centennial Year

B.L.S. Prakasa Rao, C. R. Rao Advanced Institute of Mathematics, Statistics, and Computer Science; Randy Carter, University at Buffalo; Frank Nielsen, Sony Computer Science Laboratories, Inc.; Alan Agresti, University of Florida; Aman Ullah, University of California, Riverside; and T.J. Rao, Indian Statistical Institute

Editor’s Note: This article draws from a 2014 piece by B.L.S. Prakasa Rao, “C.R. Rao: A Life in Statistics,” for the series Living Legends in Indian Science published in Current Science.

Calyampudi Radhakrishna Rao was born on September 10, 1920, in Hadagali, which is in the current State of Karnataka, India. He grew up with six brothers and four sisters in a comfortable family environment created by his mother, A. Laxmikanthamma, and father, C.D. Naidu.

A Chance Introduction to His Last Resort

It seems ironically appropriate that a living legend of statistics would enter the discipline by chance. That was the way it happened for C.R. Rao. He completed work for an MA in mathematics at Andhra University in 1940 at age 19. He then wanted to pursue a career in mathematics, but his application for a research scholarship was rejected on the grounds that it was received after the deadline. So, he applied for a job as a mathematician for the army survey unit during World War II. Rao went to Calcutta for an interview, but the job eluded him. By chance, he met a man in Calcutta who was taking a training course at the Indian Statistical Institute (ISI), which was founded in 1931 by P.C. Mahalanobis but was unknown to Rao. He applied for admission to the one-year training program in statistics hoping the additional qualifications would land him a job in the future. It was a last resort for Rao that turned into a godsend for Mahalanobis, who promptly accepted the application, thus launching Rao on his life’s work.

Design of Experiments

Rao entered the training program at ISI in January of 1941 but left it in mid-course to join the newly formed master’s program in statistics at Calcutta University. He graduated with an MA in 1943. Now, with two master’s degrees, he was given the position of research scholar at ISI and a part-time job at Calcutta University to teach a course in statistics, a position he held until 1946.

During his master’s studies, Rao published nine papers, eight of them on experimental design: six co-authored with K.R. Nair and two written independently. Over the next two years, he co-authored four mathematics papers with R.C. Bose and S. Chowla on combinatorics, group theory, and number theory, gaining mathematical knowledge that complemented his expertise in experimental design.

With this combined knowledge base, Rao developed the theory and application of orthogonal arrays. He introduced the concept in his master’s thesis and developed it in two 1946 papers: “Difference Sets and Combinatorial Arrangements Derivable from Finite Geometries” in the Proceedings of the National Institute of Science and “Hypercubes of Strength ‘d’ Leading to Confounded Designs in Factorial Experiments” in the Bulletin of the Calcutta Mathematical Society. This line of research culminated in the general definition and presentation of the application and theory of orthogonal arrays in papers in 1947 and 1949: “Factorial Experiments Derivable from Combinatorial Arrangements of Arrays” in Journal of the Royal Statistical Society and “On a Class of Arrangements” in Proceedings of the Edinburgh Mathematical Society. The profound importance of this work is noted in the preface of Orthogonal Arrays: Theory and Applications, by A.S. Hedayat, N.J.A. Sloane, and J. Stufken, published by Springer-Verlag in 1999.
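As a small illustration of the idea, an array with N runs and s levels per factor is an orthogonal array of strength t if, in every choice of t columns, each of the s^t possible level combinations appears equally often. The sketch below checks that property directly. The function name and the four-run example are illustrative assumptions, not taken from Rao’s papers.

```python
from collections import Counter
from itertools import combinations, product


def is_orthogonal_array(rows, levels, strength):
    """Return True if `rows` is an orthogonal array of the given strength.

    rows     -- list of equal-length tuples with entries in range(levels)
    levels   -- number of levels s of every factor
    strength -- number of columns t examined at a time
    """
    n_cols = len(rows[0])
    # Each t-tuple must appear exactly N / s^t times in any t columns.
    expected, remainder = divmod(len(rows), levels ** strength)
    if remainder:
        return False
    for cols in combinations(range(n_cols), strength):
        counts = Counter(tuple(row[c] for c in cols) for row in rows)
        for combo in product(range(levels), repeat=strength):
            if counts[combo] != expected:
                return False
    return True


# Four runs, three two-level factors: a half fraction of the 2^3 factorial.
half_fraction = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]
print(is_orthogonal_array(half_fraction, levels=2, strength=2))
print(is_orthogonal_array(half_fraction, levels=2, strength=3))
```

The four-run array has strength 2 but not strength 3, which is why it can estimate main effects of three factors with half the runs of the full factorial.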

A Most Remarkable Paper

Rao’s truly remarkable 1945 paper in the Bulletin of the Calcutta Mathematical Society, “Information and Accuracy Attainable in the Estimation of Statistical Parameters,” made two foundational contributions to statistical estimation theory and broke ground on the geometrization of statistics, which later provided a cornerstone of the interdisciplinary field of information geometry. The paper was included in the book Breakthroughs in Statistics, Vol. I, 1890–1990.

Estimation Theory

The paper opened new areas of research on estimation theory and produced two important results bearing Rao’s name: the Cramér-Rao inequality and Rao-Blackwell theorem, which gave rise to the term Rao-Blackwellization. These results were foundational breakthroughs and are included in virtually all textbooks on mathematical statistics.
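For readers who want the statements themselves, the two results can be written as follows in their common textbook form. The notation (a density f(x; θ), an unbiased estimator δ, a sufficient statistic T) is an assumption of this sketch rather than the notation of the 1945 paper, and the usual regularity conditions are taken for granted.

```latex
% Cramér-Rao inequality: for an unbiased estimator \delta(X) of a scalar \theta,
\operatorname{Var}_\theta\bigl(\delta(X)\bigr) \;\ge\; \frac{1}{I(\theta)},
\qquad
I(\theta) = \mathbb{E}_\theta\!\left[\left(\frac{\partial}{\partial\theta}\log f(X;\theta)\right)^{2}\right].

% Rao-Blackwell theorem: if T is sufficient for \theta and \delta is unbiased
% with finite variance, then \delta^{*} = \mathbb{E}[\delta(X) \mid T] satisfies
\mathbb{E}_\theta[\delta^{*}] = \theta,
\qquad
\operatorname{Var}_\theta(\delta^{*}) \;\le\; \operatorname{Var}_\theta(\delta).
```

Here I(θ) is the Fisher information. Replacing δ with δ* is the step that later came to be called Rao-Blackwellization.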

The results had a profound effect on other disciplines, as well. For example, in his 1998 book Physics from Fisher Information: A Unification, B. Roy Frieden writes, “The Heisenberg Uncertainty Principle is an expression of the Cramér-Rao Inequality of classical measurement theory, as applied to position determination.” Quantum physicists derived what is called the Quantum Cramér-Rao Bound (1998), which provides a sharper version of the Heisenberg Principle of Uncertainty. Rao-Blackwellization improves the efficiency of an unbiased estimator when a sufficient statistic exists.

Results obtained by other authors based on Rao’s 1945 paper and named after Rao include the Global (Bayesian) Cramér-Rao Bound (1968), Complexified and Intrinsic Cramér-Rao Bound (2005), Rao-Blackwellized Particle Filters (1996), Stereological Rao-Blackwell Theorem (1995), Rao-Blackwell versions of cross validation and nonparametric bootstrapping (2004), and Rao Functionals (1988).

Rao-Blackwellization now plays an important role in computational statistics, with recent major developments in stochastic simulation methods such as Markov Chain Monte Carlo.
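A minimal simulation can make the variance reduction concrete. The sketch below uses a standard textbook example that is an assumption of this illustration, not something described in the article: estimating P(X = 0) = exp(-λ) from a Poisson sample. The crude unbiased estimator is the indicator that the first observation is zero. Conditioning on the sufficient statistic (the sample total) gives ((n - 1)/n) raised to that total. All function names are hypothetical.

```python
import math
import random


def poisson_sample(lam, rng):
    """Draw one Poisson(lam) variate with Knuth's multiplication method."""
    threshold = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def naive_estimate(xs):
    """Unbiased but crude: indicator that the first observation is zero."""
    return 1.0 if xs[0] == 0 else 0.0


def rao_blackwell_estimate(xs):
    """E[naive | sum(xs)]: given the total, X_1 is Binomial(total, 1/n)."""
    n = len(xs)
    return ((n - 1) / n) ** sum(xs)


def compare(lam=1.0, n=10, reps=20000, seed=0):
    """Simulate both estimators and return their sample means and variances."""
    rng = random.Random(seed)
    naive, improved = [], []
    for _ in range(reps):
        xs = [poisson_sample(lam, rng) for _ in range(n)]
        naive.append(naive_estimate(xs))
        improved.append(rao_blackwell_estimate(xs))

    def mean(v):
        return sum(v) / len(v)

    def var(v):
        m = mean(v)
        return sum((x - m) ** 2 for x in v) / (len(v) - 1)

    return {
        "target": math.exp(-lam),
        "naive_mean": mean(naive),
        "naive_var": var(naive),
        "rb_mean": mean(improved),
        "rb_var": var(improved),
    }


results = compare()
for name, value in results.items():
    print(f"{name}: {value:.4f}")
```

Both estimators center on the same target, but the conditioned version should show a much smaller variance, which is the content of the Rao-Blackwell theorem.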

Geometrization of Statistics

In the same 1945 paper, Rao proposed a differential geometric foundation for statistics by introducing a quadratic differential metric in the space of probability measures. Historically, Rao was working in 1943 at the ISI under the guidance of P. C. Mahalanobis, who asked him to perform cluster analysis of several castes and tribes based on anthropometric measurements of individuals. Mahalanobis initially suggested the use of his D-squared distance, but this work motivated Rao to investigate more general notions of distance. He introduced Riemannian differential geometry for modeling the space of probability distributions using Fisher information as a Riemannian metric—the so-called Fisher-Rao metric. The induced geodesic distance between two probability distributions yielded a distance metric called Rao’s distance. This work has become one of the most active and important topics in information science over the last 25 years, connecting statistics, information theory, control theory, and statistical physics. It is fundamental to the multidisciplinary field of information geometry, which is increasingly important in information sciences, machine learning, and AI.
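In two simple families, Rao’s distance has a well-known closed form, which the sketch below computes. For discrete distributions on a common finite set, the square-root map places them on a sphere and the distance is twice the arc angle. For univariate normal distributions, the Fisher information metric is a rescaled hyperbolic half-plane metric. The function names are illustrative assumptions, and the formulas are the standard ones from the information geometry literature rather than expressions quoted from the 1945 paper.

```python
import math


def fisher_rao_categorical(p, q):
    """Rao's distance between two discrete distributions on the same finite set."""
    # Bhattacharyya coefficient: cosine of the angle between sqrt(p) and sqrt(q).
    bc = sum(math.sqrt(pi * qi) for pi, qi in zip(p, q))
    bc = min(1.0, max(0.0, bc))  # guard against rounding just outside [0, 1]
    return 2.0 * math.acos(bc)


def fisher_rao_normal(mu1, sigma1, mu2, sigma2):
    """Rao's distance between N(mu1, sigma1^2) and N(mu2, sigma2^2)."""
    # Metric ds^2 = (dmu^2 + 2 dsigma^2) / sigma^2, i.e. twice the
    # hyperbolic half-plane metric in the coordinates (mu / sqrt(2), sigma).
    num = 0.5 * (mu1 - mu2) ** 2 + (sigma1 - sigma2) ** 2
    return math.sqrt(2.0) * math.acosh(1.0 + num / (2.0 * sigma1 * sigma2))


print(fisher_rao_categorical([0.5, 0.5], [0.9, 0.1]))
print(fisher_rao_normal(0.0, 1.0, 1.0, 1.0))
print(fisher_rao_normal(0.0, 1.0, 0.0, math.e))
```

In the last call the two normals share a mean, and the distance reduces to the square root of 2 times the absolute log ratio of the standard deviations.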

For more about Rao’s 1945 paper, read the chapter by P.K. Pathak introducing it and a reproduction of the original article, both in Breakthroughs in Statistics, Vol. I.

The Score Test

Rao has done much to advance knowledge in theory and practice of statistical inference. His book Linear Statistical Inference and Its Applications has been cited nearly 17,000 times. His most acclaimed single piece of work in this area, however, is what is now known as Rao’s score test.

During his time as a research scholar at ISI, Rao was approached by a scientist named S.J. Poti, who asked for help in testing a one-sided alternative hypothesis (θ > θ0). Rao set to work to develop a test that was most powerful for alternatives close to θ0. He and Poti published a paper titled “On Locally Most Powerful Tests When the Alternatives Are One Sided” in Sankhya in 1946. Rao continued working on the problem after he was sent to Cambridge later in 1946 by Mahalanobis and, in 1948, published his seminal paper, “Large Sample Tests of Statistical Hypotheses Concerning Several Parameters with Applications to Problems of Estimation,” in Proceedings of the Cambridge Philosophical Society. This article became his second to be included in Breakthroughs in Statistics, appearing in Vol. III.

Rao followed this publication with several relevant articles. For example, in 1961, he showed how to construct a score-type statistic for comparing two nested multinomial models. It is now known that many of the most-used significance tests for categorical data analysis are score tests. These include McNemar’s test, the Mantel-Haenszel test, the Cochran-Armitage test, and Cochran’s test. Other score tests include a test of normality based on skewness and kurtosis proposed by R. A. Fisher and Karl Pearson in 1930, tests based on the partial likelihood for Cox’s proportional hazards model, and locally most powerful rank tests in nonparametric statistics. Rao’s test has had an immense effect on econometric inference and is now the most common method of testing used by econometricians.
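To make the idea concrete, the score statistic for a single parameter is the squared derivative of the log-likelihood at the null value divided by the Fisher information at that value. It needs no estimate under the alternative. The sketch below works this out for a binomial proportion and shows that McNemar’s statistic is the same quantity applied to the discordant pairs with a null value of 1/2. The function names and the numbers are hypothetical, chosen only for illustration.

```python
import math


def binomial_score_test(successes, trials, p0):
    """Score test of H0: p = p0 for a binomial count.

    Returns the chi-square statistic (1 df) and its two-sided p-value.
    """
    # Derivative of the binomial log-likelihood, evaluated at p0.
    score = (successes - trials * p0) / (p0 * (1.0 - p0))
    # Fisher information for p, evaluated at p0.
    information = trials / (p0 * (1.0 - p0))
    statistic = score ** 2 / information
    # Upper tail of chi-square with 1 df, via the normal tail.
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


def mcnemar_statistic(b, c):
    """McNemar's statistic from the two discordant-pair counts b and c."""
    return (b - c) ** 2 / (b + c)


stat, p_value = binomial_score_test(successes=60, trials=100, p0=0.5)
print(stat, p_value)

# McNemar's test equals the binomial score test on the discordant pairs.
print(mcnemar_statistic(15, 5), binomial_score_test(15, 15 + 5, 0.5)[0])
```

Because everything is evaluated at the null value, the statistic stays simple even when fitting the model under the alternative would be awkward.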

The score test began to receive increased attention as the foundation for the development of hypothesis tests when nonlikelihood-based estimation methods such as generalized estimating equations became popular in the 1980s. Deficiencies in Wald-type tests in such settings were observed and, consequently, a flurry of development based on Rao’s score test occurred, particularly in econometrics and biostatistics.

Multivariate Theory and Applications

In November of 1946, Rao went to Cambridge to analyze measurements from human skeletons brought from Jebel Moya in North Africa by the University Museum of Archeology and Anthropology. His task at the museum was to trace the origin of the people of Jebel Moya using the Mahalanobis D-squared statistic. After his day job at the museum, Rao would spend his evenings in Fisher’s genetics laboratory mapping the chromosomes of mice. More notably, he was Fisher’s one and only PhD student and completed his dissertation, “Statistical Problems of Biological Classification,” in 1948. The dissertation was read by John Wishart.

In the following three papers related to his dissertation, Rao laid the foundation for the modern theory of multivariate methodology:

- “Tests with Discriminant Functions in Multivariate Analyses,” Sankhya 7 (1946)

- “Utilization of Multiple Measurements in Problems of Biological Classification,” Journal of the Royal Statistical Society Series B (1948)

- “Tests of Significance in Multivariate Analysis,” Biometrika 35:58–79 (1948)

He continued to make contributions in this area throughout his career and, 41 years later, was awarded the Samuel S. Wilks Memorial Medal, in part for major contributions to the theory of multivariate statistics and applications of that theory to problems of biometry. In 2002, Rao was awarded the National Medal of Science for pioneering contributions to the foundations of statistical theory and multivariate statistical methodology and their applications.

In conclusion, we would like to leave readers with an appreciation for Rao’s place in history. He is one of 77 contemporary scientists in all fields listed in Mariana Cook’s book, Faces of Science, and among a galaxy of 57 famous scientists of the 16th through the 20th centuries listed in the “Chronology of Probabilists and Statisticians” compiled by M. Leung at The University of Texas, El Paso. He produced 14 books (two of them translated into several languages), 475 research papers, 51 PhD students, and 42 edited volumes of the Handbook of Statistics. He received 38 honorary doctorates from universities in 19 countries spanning six continents. His special awards include the National Medal of Science, USA; India Science Award; and the Guy Medal in Gold, United Kingdom. Finally, results that bear his name include the Cramér-Rao bound, Rao’s Score Test, Rao-Blackwell Theorem, Rao-Blackwellization, Fisher-Rao Theorem, Rao’s Theorem, Fisher-Rao Metric, Rao Distance, Neyman-Rao Test, Rao least squares, IPM Methods, Rao’s covariance structure, Rao’s U Test, Rao’s F Test, Rao’s Paradox, Rao-Rubin Theorem, Lau-Rao-Shanbhag Theorems, Rao-Yanai Inverse, and Kagan-Linnik-Rao Theorem.

Deeper Dive

For broader coverage of Rao’s life and his many academic accomplishments and honors, visit the following webpages:

C.R. Rao Advanced Institute of Mathematics, Statistics, and Computer Science (AIMSCS)

“About C.R. Rao,” by Marianna Bolla

